In this blog post we will discuss how to write a UDF for a Pig script. UDF stands for User Defined Function, and it works like a normal function we use in our programs.

You can write UDFs in Java and some other languages. There are a few steps to keep in mind when writing your UDF.

The steps are as follows:

1) Download the following JAR files:

commons-lang3-3.1.jar

commons-logging-1.1.3.jar

Pig.jar

You can find all of the above files by searching on Google.

2) Create a new class for your UDF in Eclipse or any IDE you prefer. In this tutorial I am using Eclipse. After creating the class, put the following code in it:

Code:

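import java.io.IOException;

import org.apache.pig.EvalFunc;
import org.apache.pig.data.Tuple;

// An eval UDF that returns its first input field in upper case.
public class UPPER extends EvalFunc<String>
{
    @Override
    public String exec(Tuple input) throws IOException {
        // Pig passes each input row as a Tuple; return null for an empty row.
        if (input == null || input.size() == 0)
            return null;
        try {
            String str = (String) input.get(0);
            // A null field stays null instead of causing a NullPointerException.
            if (str == null)
                return null;
            return str.toUpperCase();
        } catch (Exception e) {
            // For example, a ClassCastException when the field is not a chararray.
            throw new IOException("Caught exception processing input row ", e);
        }
    }
}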

In the above code, EvalFunc<String> sets the type of UDF we are creating: an eval function whose result is a String. There are four kinds of UDF you can create, and the video linked at the bottom of this post covers them in more detail. A UDF receives every input row in the form of a Tuple, and an EvalFunc computes one value from that tuple (here, the upper-case version of its first field).

The exec function is the method Pig calls on an EvalFunc for each input tuple, so every EvalFunc must implement it.
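To try out the exec contract without a Hadoop setup, here is a plain-Java sketch. EvalFuncSketch is a hypothetical stand-in for Pig's EvalFunc<T>, where the type parameter is the return type of exec. A Pig Tuple is modelled as a List<Object>. None of these names are Pig's real API; the logic only mirrors the UPPER class.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical stand-in for Pig's EvalFunc<T>: T is the return type of exec().
// A Pig Tuple is modelled as a List<Object>; this is NOT Pig's real API.
abstract class EvalFuncSketch<T> {
    public abstract T exec(List<Object> input) throws IOException;
}

// Same logic as the UPPER class, written against the stand-in.
class UpperSketch extends EvalFuncSketch<String> {
    @Override
    public String exec(List<Object> input) throws IOException {
        if (input == null || input.isEmpty()) {
            return null; // empty row: nothing to convert
        }
        try {
            String str = (String) input.get(0); // first field of the row
            return str == null ? null : str.toUpperCase();
        } catch (ClassCastException e) {
            // a field that is not a string ends up here
            throw new IOException("Caught exception processing input row ", e);
        }
    }
}

public class EvalFuncDemo {
    public static void main(String[] args) throws IOException {
        EvalFuncSketch<String> upper = new UpperSketch();
        List<Object> row = Arrays.asList("simmant");
        List<Object> empty = Collections.emptyList();
        System.out.println(upper.exec(row));   // SIMMANT
        System.out.println(upper.exec(empty)); // null
    }
}
```

Passing a row whose first field is a number, such as 42, makes exec throw the IOException. The cast in the real UDF behaves the same way.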

3) Create a JAR containing your class and save it to any path you want. I am using Ubuntu, so I saved it in my home folder. In Eclipse you can create the JAR by right-clicking the project and choosing Export -> Java -> JAR file.

4) Now it is time to add the JAR to Pig. My previous blog post explains how to start Pig in the Ubuntu terminal, so you can follow it for the Pig basics.

After entering the Pig Grunt shell, run the following statement to add our JAR:

grunt> register udf1.jar;

Here REGISTER is the keyword used to add a JAR to a Pig script. My JAR is in my home folder, so I do not give a path, but you can change it to the path of your JAR, like:

register /your/path/to/udf1.jar;

5) Next, load the file that holds the data we want to work with, using the following Pig statement:

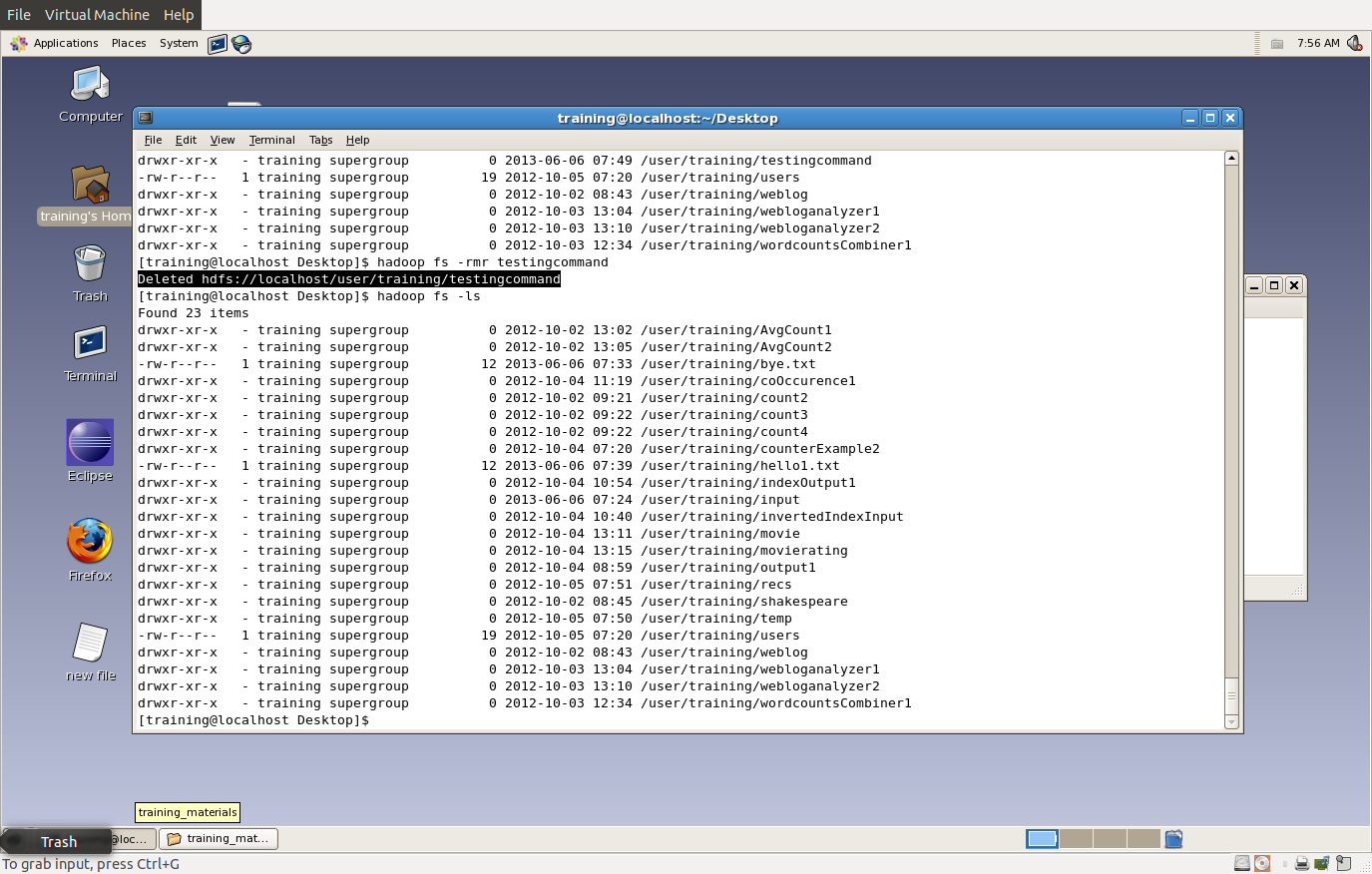

grunt> A = LOAD 'stu1.txt' as (name:chararray);

In the above code, stu1.txt is my file on Hadoop HDFS. Pig uses HDFS by default, so replace it with the path of your own file.

6) Next, we call our UDF in the Pig script with the following code:

grunt> B = FOREACH A GENERATE UPPER(name);

In the above code, UPPER is the name of the class in our JAR. A UDF is called by its class name, and we pass name as the argument. If your class is inside a package, use the fully qualified name instead, for example myudfs.UPPER.
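Because a UDF is called by its class name, Pig has to turn the name written in the script into a Java class at run time. It looks the name up on a classpath that includes the JARs you REGISTER. Below is a rough plain-Java sketch of that kind of lookup using reflection. The resolve method and the prefix list are hypothetical and only illustrate the idea; they are not Pig's internals. The demo resolves java.lang.StringBuilder so it runs without the UDF JAR.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: resolve a function name from a script to a Java class
// by reflection. The prefix list is an assumption for the demo, not Pig's config.
public class UdfResolverSketch {

    // Try the name with each prefix in order ("" = as written / default package)
    // and return the first class that loads.
    static Class<?> resolve(String name, List<String> prefixes) throws ClassNotFoundException {
        for (String prefix : prefixes) {
            try {
                return Class.forName(prefix + name);
            } catch (ClassNotFoundException e) {
                // not found under this prefix; try the next one
            }
        }
        throw new ClassNotFoundException("Could not resolve " + name);
    }

    public static void main(String[] args) throws Exception {
        List<String> prefixes = Arrays.asList("", "java.lang.");
        Class<?> c = resolve("StringBuilder", prefixes);
        System.out.println(c.getName()); // java.lang.StringBuilder
        // Instantiate through the no-argument constructor, as a UDF class needs one.
        Object instance = c.getDeclaredConstructor().newInstance();
        System.out.println(instance.getClass().getSimpleName()); // StringBuilder
    }
}
```

With only the empty prefix, a bare name resolves only for a class in the default package. That is why a class inside a package needs its full name.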

7) To see the data, we use a simple DUMP:

grunt> DUMP B;

The data in our stu1.txt is:

simmant

mohan

rohan

Then the output is:

(SIMMANT)

(MOHAN)

(ROHAN)
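Steps 5 to 7 can be modelled in plain Java to show what happens to each row:

- LOAD yields one single-field tuple per line.
- FOREACH ... GENERATE calls the function once per tuple.
- DUMP prints each tuple in parentheses.

This is a sketch only. foreachUpper and dumpLine are hypothetical helper names, and no Pig classes are involved.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of LOAD, FOREACH ... GENERATE UPPER(name), and DUMP.
// Illustration only: no Pig classes are used.
public class UpperPipelineSketch {

    // B = FOREACH A GENERATE UPPER(name): apply the function to every row.
    static List<String> foreachUpper(List<String> a) {
        List<String> b = new ArrayList<>();
        for (String name : a) {
            b.add(name == null ? null : name.toUpperCase());
        }
        return b;
    }

    // DUMP B: print a single-field tuple in parentheses; a null field prints as ().
    static String dumpLine(String field) {
        return "(" + (field == null ? "" : field) + ")";
    }

    public static void main(String[] args) {
        // A = LOAD 'stu1.txt' AS (name:chararray), using the sample names
        List<String> a = Arrays.asList("simmant", "mohan", "rohan");
        for (String field : foreachUpper(a)) {
            System.out.println(dumpLine(field));
        }
    }
}
```

Running main prints (SIMMANT), (MOHAN) and (ROHAN), one per line, matching the DUMP output.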

References for today's post:

For UDF details:

1) https://www.youtube.com/watch?v=OoFNQDpcWR0

For Pig details:

2) http://wiki.apache.org/pig/PigBuiltins

For the UDF manual:

3) http://wiki.apache.org/pig/UDFManual

Tuesday 18 June 2013

Friday 14 June 2013

Pig for Beginners

Pig Scripting Language:

How to start coding in Pig

1) Type pig in the terminal:

$ pig

2) This opens the shell, which is called the Grunt shell:

grunt>

3) Try a sample command in the Grunt shell that loads text from a file. Note that Pig works with your data on HDFS, so first copy your data to HDFS and then use it:

grunt> A = LOAD 'data.txt' USING PigStorage(',') AS (name:chararray, class:chararray, age:int);

In the above command, LOAD is the Pig operator that loads a file into the script. USING is the keyword for choosing one of Pig's predefined load functions. Here we use PigStorage, which splits each line into fields at a delimiter symbol. For example, with this data:

stu1,1st,4

stu2,2nd,3

stu3,3rd,2

PigStorage(',') assigns the columns by splitting each line at the ',' mark. (name:chararray, class:chararray, age:int) is the schema: the names and types of the fields we use in the rest of our script.
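The splitting described above can be sketched in plain Java: each line is split at the ',' delimiter and the pieces are converted to the declared types. This is a simplified illustration, not PigStorage's implementation. The Row class and load method are hypothetical. The null handling (a missing field or a non-numeric age becomes null) is my assumption about roughly how Pig treats values that do not fit the schema.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Plain-Java sketch of LOAD ... USING PigStorage(',') AS
// (name:chararray, class:chararray, age:int). Not Pig's implementation.
public class PigStorageSketch {

    static final class Row {
        final String name;
        final String clazz; // "class" is a reserved word in Java
        final Integer age;  // null when the field is missing or not a number

        Row(String name, String clazz, Integer age) {
            this.name = name;
            this.clazz = clazz;
            this.age = age;
        }

        @Override
        public String toString() {
            // DUMP-style tuple; a null field prints as an empty slot
            return "(" + str(name) + "," + str(clazz) + "," + str(age) + ")";
        }

        private static String str(Object o) {
            return o == null ? "" : o.toString();
        }
    }

    // Field i of the split line, or null if the line has fewer fields.
    private static String field(String[] parts, int i) {
        return i < parts.length ? parts[i] : null;
    }

    // Convert a text field to int; an unparseable value becomes null.
    private static Integer toInt(String s) {
        if (s == null) {
            return null;
        }
        try {
            return Integer.valueOf(s.trim());
        } catch (NumberFormatException e) {
            return null;
        }
    }

    static List<Row> load(List<String> lines, char delimiter) {
        List<Row> rows = new ArrayList<>();
        String regex = Pattern.quote(String.valueOf(delimiter));
        for (String line : lines) {
            String[] parts = line.split(regex, -1); // -1 keeps trailing empty fields
            rows.add(new Row(field(parts, 0), field(parts, 1), toInt(field(parts, 2))));
        }
        return rows;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("stu1,1st,4", "stu2,2nd,3", "stu3,3rd,2");
        for (Row r : load(lines, ',')) {
            System.out.println(r);
        }
    }
}
```
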

4) Next, write the statement that filters the data:

grunt> B = FILTER A BY age<4;

Here FILTER keeps only the rows whose age field is less than 4.

5) To see the output of our script, we use DUMP:

grunt> DUMP B;

It gives the following output:

(stu2,2nd,3)

(stu3,3rd,2)
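The FILTER step can also be sketched in plain Java, using the sample rows from the data file. The sketch keeps a row only when age < 4 is true. In Pig a null age makes the condition null and FILTER drops such rows, so the sketch skips them too. FilterSketch and its methods are hypothetical names for illustration, and rows are plain Object[] arrays rather than Pig tuples.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

// Plain-Java sketch of B = FILTER A BY age<4; followed by DUMP B.
// Rows are Object[] {name, class, age}; an illustration, not Pig code.
public class FilterSketch {

    // Keep a row only when age < limit is true; a null age never passes.
    static List<Object[]> filterAgeBelow(List<Object[]> rows, int limit) {
        List<Object[]> kept = new ArrayList<>();
        for (Object[] row : rows) {
            Integer age = (Integer) row[2];
            if (age != null && age < limit) {
                kept.add(row);
            }
        }
        return kept;
    }

    // Format a row the way DUMP prints a tuple, e.g. (stu2,2nd,3).
    static String dump(Object[] row) {
        StringJoiner j = new StringJoiner(",", "(", ")");
        for (Object field : row) {
            j.add(field == null ? "" : field.toString());
        }
        return j.toString();
    }

    public static void main(String[] args) {
        List<Object[]> a = Arrays.asList(
                new Object[] {"stu1", "1st", 4},
                new Object[] {"stu2", "2nd", 3},
                new Object[] {"stu3", "3rd", 2});
        for (Object[] row : filterAgeBelow(a, 4)) {
            System.out.println(dump(row));
        }
    }
}
```

Running main prints (stu2,2nd,3) and (stu3,3rd,2), the same rows as the DUMP output above.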

For the basics, follow these links:

https://cwiki.apache.org/PIG/pigtutorial.html

https://www.youtube.com/watch?v=OoFNQDpcWR0

http://www.orzota.com/pig-for-beginners/

Thursday 13 June 2013

Importing a MySQL table into Hive

1) Create a MySQL database:

>create database test;

2) Switch to the new database:

>use test;

3) Create a table in the database:

>create table test1 (name text,id int,password text, primary key(id));

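Note: by default Sqoop uses the table's primary key to split the import across parallel map tasks, so check your table definition once before importing it into Hive. A table without a primary key needs --split-by <column> or -m 1 on the sqoop command.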
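The primary-key requirement makes more sense with a small model. Roughly, Sqoop looks up the minimum and maximum of the split column (the primary key by default) and gives each map task one range of it. Without such a column it has nothing to divide on. The Java below is a simplified model of that idea, not Sqoop's code. The method name and the inclusive range format are my own choices.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of splitting an import on an integer key so that each
// parallel map task reads its own range. Not Sqoop's actual implementation.
public class KeySplitSketch {

    // Divide [min, max] into at most numMappers contiguous, non-overlapping
    // ranges and return one WHERE condition per range.
    static List<String> splitConditions(String column, long min, long max, int numMappers) {
        if (numMappers < 1) {
            throw new IllegalArgumentException("numMappers must be >= 1");
        }
        List<String> conditions = new ArrayList<>();
        if (max < min) {
            return conditions; // empty table: nothing to split
        }
        long span = max - min + 1;                // number of key values in range
        int n = (int) Math.min(numMappers, span); // never more ranges than keys
        long size = span / n;
        long extra = span % n;                    // first 'extra' ranges get one more key
        long lo = min;
        for (int i = 0; i < n; i++) {
            long hi = lo + size - 1 + (i < extra ? 1 : 0);
            conditions.add(column + " >= " + lo + " AND " + column + " <= " + hi);
            lo = hi + 1;
        }
        return conditions;
    }

    public static void main(String[] args) {
        // Keys 1..10 split across 4 mappers.
        for (String c : splitConditions("id", 1, 10, 4)) {
            System.out.println(c);
        }
    }
}
```

For keys 1 to 10 and four mappers this yields the ranges 1-3, 4-6, 7-8 and 9-10. The example table above has a single row with id 2, so it collapses to one range.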

4) Insert data into the table:

>insert into test1 values('test',2,'test');

5) We have now created a database named test, containing a table named test1 with the fields name (text), id (int) and password (text). With everything done in MySQL, let's move on to Sqoop.

6) Type the following command in the terminal:

$ sqoop import --connect jdbc:mysql://localhost/test --username 'your username' --password 'your password' --table test1 --hive-import

7) In the above command I use the database test and the table test1 that we created above. You can use your own database and table instead. Then press Enter.

8) Now type hive in the terminal:

$ hive

9) Then run a query to see the values in the table:

select * from test1;

If you already have your database, you can start from step 6. :)
